When you’re faced with any decision, particularly a complex one, don’t you wish you had some sort of clear, repeatable process to help you make the best choice?

That’s where decision trees come in.

They take uncertainty and turn it into clarity, guiding you logically, step by step, through your choices until you land on the right outcome. Whether you’re mapping out a new workflow or building a predictive model, decision trees provide a simple, visual way to make logic-based decisions.

In this guide, we’ll show you how decision trees work, where they show up in the real world and how to build one.

Key takeaways

- Decision trees make logic visible. They turn complex choices into a step-by-step structure that clarifies the decision-making process for both individuals and teams.

- They’re versatile and widely used. Decision trees power everything from project workflows to predictive models, supporting both classification and regression tasks.

- They’re easy to build and interpret. You can sketch one manually or train one on data — and their simple design means people can actually understand what they’re looking at.

What is a decision tree?

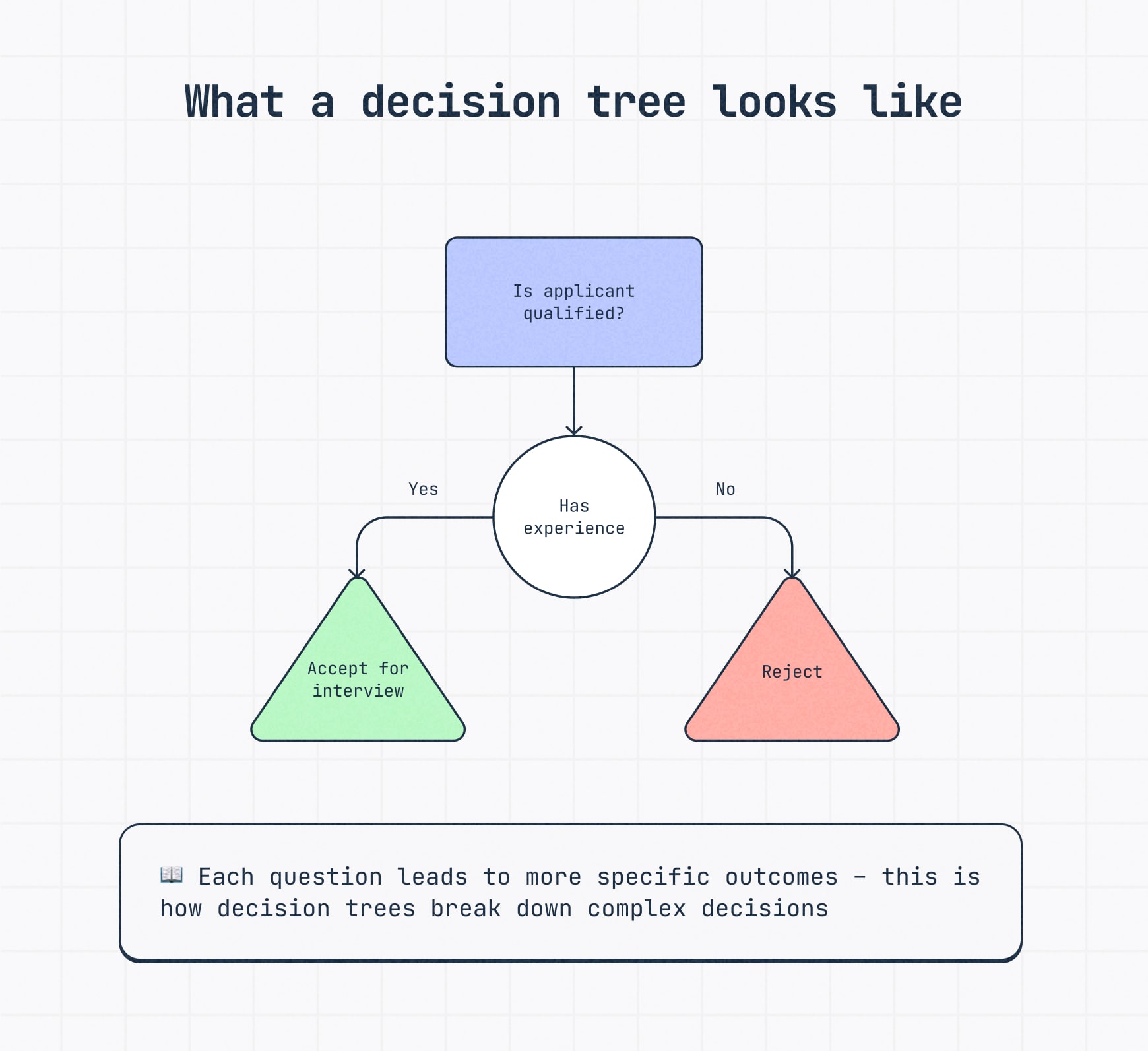

At first glance, a decision tree looks like a flow diagram — and it kinda, sorta is one. You’ll certainly notice some similarities if you’ve ever had to create a flowchart.

But it’s more than just arrows and boxes — it’s logic you can see.

Each fork in the road is a decision point, and each branch leads down a new path depending on whether a specific condition is met. In more technical terms, each internal node represents a test on an attribute, each branch represents an outcome of that test and each leaf node represents a class label or final outcome.

If that sounded like gibberish, don’t worry: you’ll find detailed explanations below.

Why decision trees matter

A good decision isn’t just about getting the right answer — it’s about knowing how you got there so you feel confident in that decision.

Decision trees and decision tree analysis help people and teams make smarter choices by laying out the internal logic step-by-step.

They’re especially handy when consistency matters (say, when evaluating job applicants or handling a customer support question), because a decision tree shows every potential outcome and what to do in response.

They’re also powerful teaching tools. When everyone can see the "why" behind a choice, trust and alignment improve across the board.

Key terms and decision tree symbols

Before we get into examples and how-tos, let’s break the jargon down. You don’t need a PhD to grasp these, just a few clear definitions and a visual.

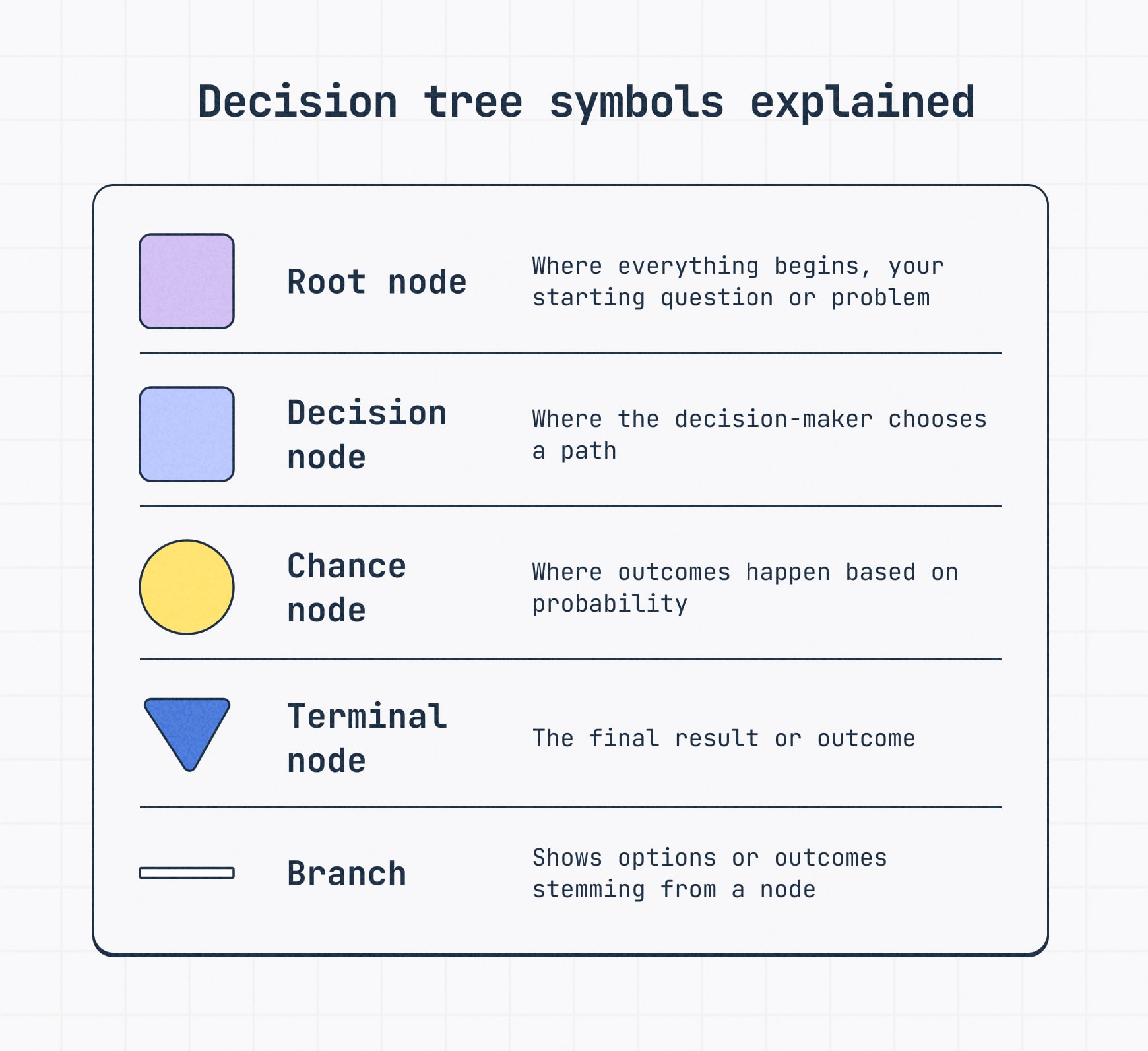

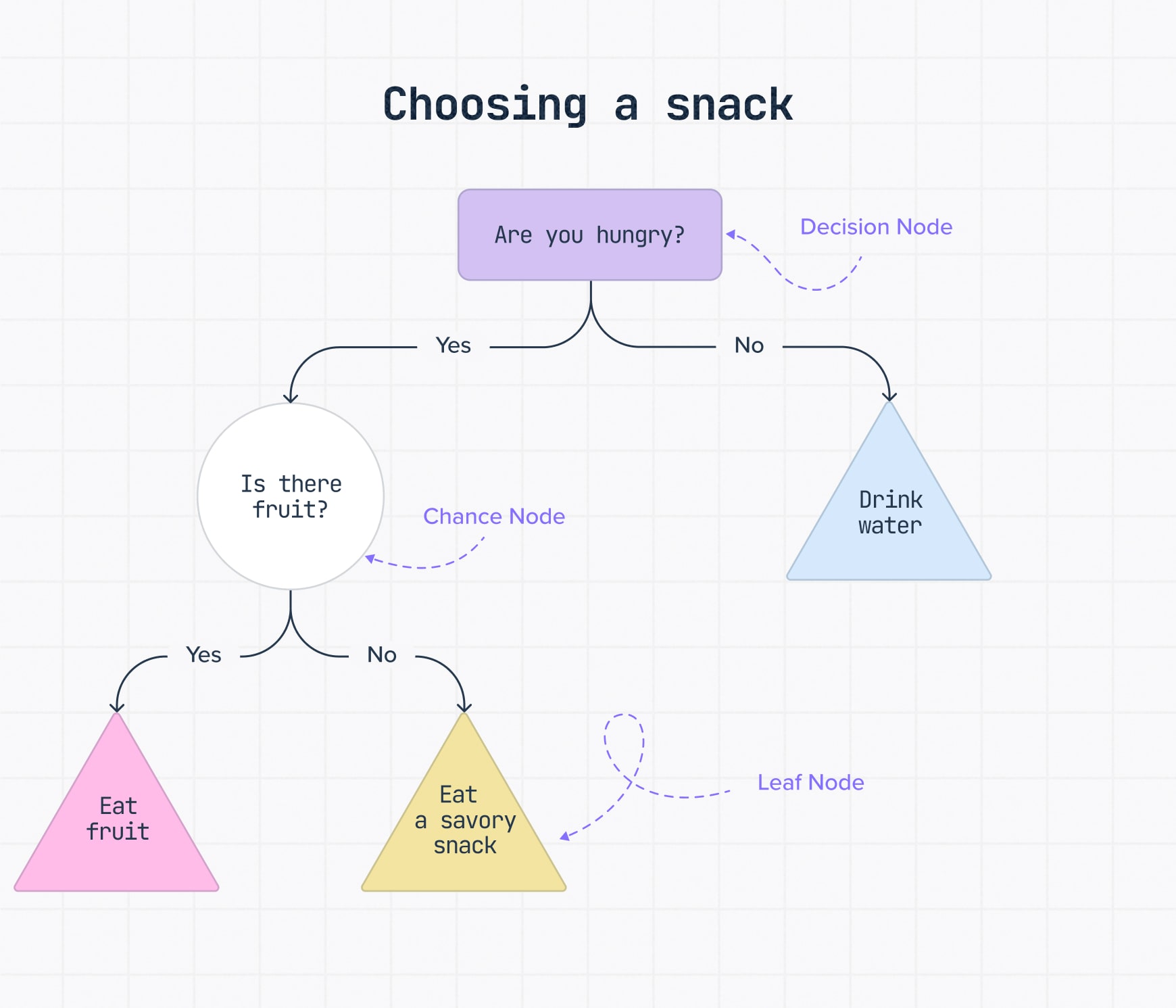

Decision tree symbols

The building blocks of your tree are a small batch of shapes and lines.

- Root node: The starting point of the decision tree, representing the main question or problem to be addressed.

- Decision node (or internal node): A point within the tree where a test is applied to split the data based on a specific feature.

- Chance node: A point in the tree representing an uncertain event with multiple possible outcomes, each associated with a probability.

- Leaf node (or terminal node): The end result, like "approve loan" or "reject." A leaf node represents the final outcome.

- Parent node: A node that splits into two or more child nodes.

- Child node: A node that descends from a parent node. A child node can, in turn, become the parent of nodes further along.

- Branches: The different options or decisions stemming from a node.
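If you’re curious how these pieces look in code, here’s a minimal Python sketch. It models only decision nodes and leaf nodes (no chance nodes), and the loan questions, thresholds and names like `DecisionNode` and `decide` are hypothetical, made up purely for illustration:

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Union


@dataclass
class Leaf:
    """Leaf (terminal) node: holds the final outcome."""
    outcome: str


@dataclass
class DecisionNode:
    """Decision (internal) node: a yes/no test with one child per answer."""
    question: str                  # human-readable label, as it would appear in a diagram
    test: Callable[[dict], bool]   # returns True or False for a given input
    if_true: Node                  # child node followed when the test passes
    if_false: Node                 # child node followed when the test fails


Node = Union[Leaf, DecisionNode]


def decide(node: Node, applicant: dict) -> str:
    """Start at the root and follow branches until a leaf is reached."""
    while isinstance(node, DecisionNode):
        node = node.if_true if node.test(applicant) else node.if_false
    return node.outcome


# Hypothetical loan tree: the thresholds are illustrative, not real lending rules.
root = DecisionNode(                    # root node (and parent of the income node)
    question="Credit score at least 650?",
    test=lambda a: a["credit_score"] >= 650,
    if_true=DecisionNode(               # child node that is also a parent
        question="Income above $40,000?",
        test=lambda a: a["income"] > 40_000,
        if_true=Leaf("approve loan"),
        if_false=Leaf("reject"),
    ),
    if_false=Leaf("reject"),
)

print(decide(root, {"credit_score": 700, "income": 55_000}))  # approve loan
print(decide(root, {"credit_score": 600, "income": 90_000}))  # reject
```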

Key decision tree terms to know

Aside from the decision tree shapes, there are a handful of terms and ideas that are important to get a feel for.

- Entropy: A fancy word for uncertainty. High entropy means lots of unpredictability. Low entropy means the data is pretty clean-cut.

- Information gain: The difference in entropy before and after a split — this tells the model which feature creates the best, most informative split.

- Gini impurity: Another way of measuring how mixed the data is at a given node. It’s what helps decide which question to ask next.

- Overfitting: Learning the training data too well, leading to poor performance on new, real-world data.

- Pruning: Like trimming a tree, you cut off unnecessary branches to avoid overcomplicating the model and reduce overfitting.

- Categorical data: Qualities or characteristics that can be divided into distinct groups.

- Numerical data: Quantities or measurements that have a mathematical value and can be subjected to arithmetic operations.
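To make the math behind entropy, Gini impurity and information gain a bit more concrete, here’s a small Python sketch using the standard formulas (entropy measured in bits). The churn labels are invented example data:

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy in bits: the sum of -p * log2(p) over each class."""
    n = len(labels)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(labels).values())


def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions (0 = pure)."""
    n = len(labels)
    return 1 - sum((c / n) ** 2 for c in Counter(labels).values())


def information_gain(parent, children):
    """Entropy before the split minus the size-weighted entropy after it."""
    n = len(parent)
    after = sum(len(child) / n * entropy(child) for child in children)
    return entropy(parent) - after


# Invented data: 10 customers, half of whom churned.
parent = ["churn"] * 5 + ["stay"] * 5
left = ["churn"] * 4 + ["stay"]   # one side of a candidate split
right = ["stay"] * 4 + ["churn"]  # the other side

print(entropy(parent))                                    # 1.0 (maximum uncertainty for 2 classes)
print(gini(parent))                                       # 0.5
print(round(information_gain(parent, [left, right]), 3))  # 0.278
```

A split that separated churners from stayers perfectly would score the maximum gain of 1.0 here, which is why the model prefers it.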

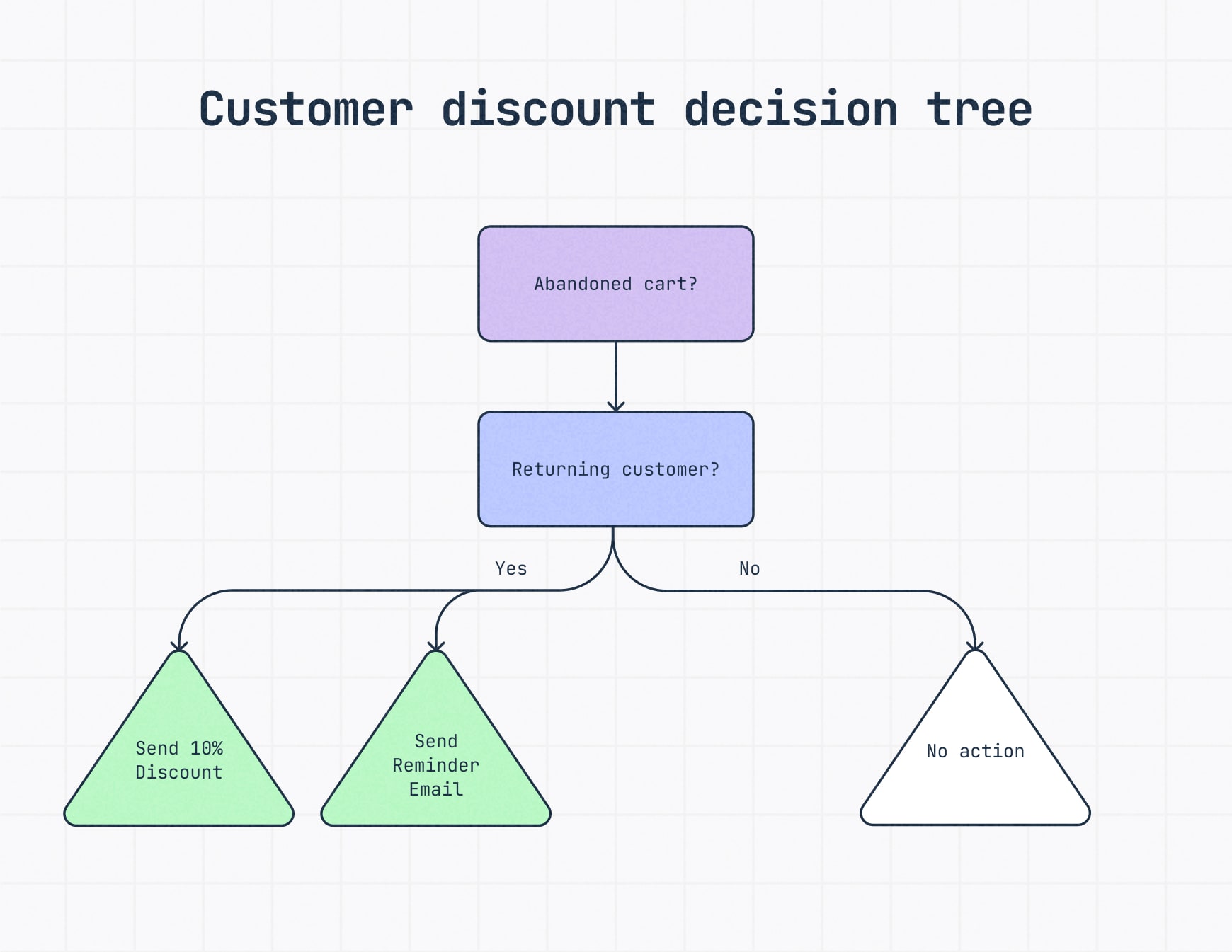

Here’s a quick and easy example: you’re deciding whether to offer a customer a discount.

You start with a basic yes-or-no question: did they abandon their cart? From there, you might check whether they’re a returning customer. Each layer of logic adds a branch, and the final result (maybe a 10% coupon, or just a reminder email) is your "leaf."

That’s a decision tree in action: a visual map of how to get from input to outcome. Structuring a choice this way reduces guesswork and helps you make more informed decisions.
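Here’s the same discount tree written as plain Python `if` statements, a rough sketch rather than production logic. The example doesn’t say which leaf goes with which branch, so we’ve assumed returning customers get the reminder email, new customers get the coupon, and anyone who didn’t abandon their cart gets no offer (that "no offer" leaf is our own addition):

```python
def discount_decision(abandoned_cart: bool, returning_customer: bool) -> str:
    """Each `if` is a decision node and each `return` is a leaf.
    The leaf assignments are assumptions made for illustration."""
    if not abandoned_cart:       # root node: did they abandon their cart?
        return "no offer"
    if returning_customer:       # decision node: are they a returning customer?
        return "reminder email"
    return "10% coupon"


for abandoned in (True, False):
    for returning in (True, False):
        print(f"abandoned={abandoned}, returning={returning} -> "
              f"{discount_decision(abandoned, returning)}")
```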

Types of decision trees and decision tree algorithms

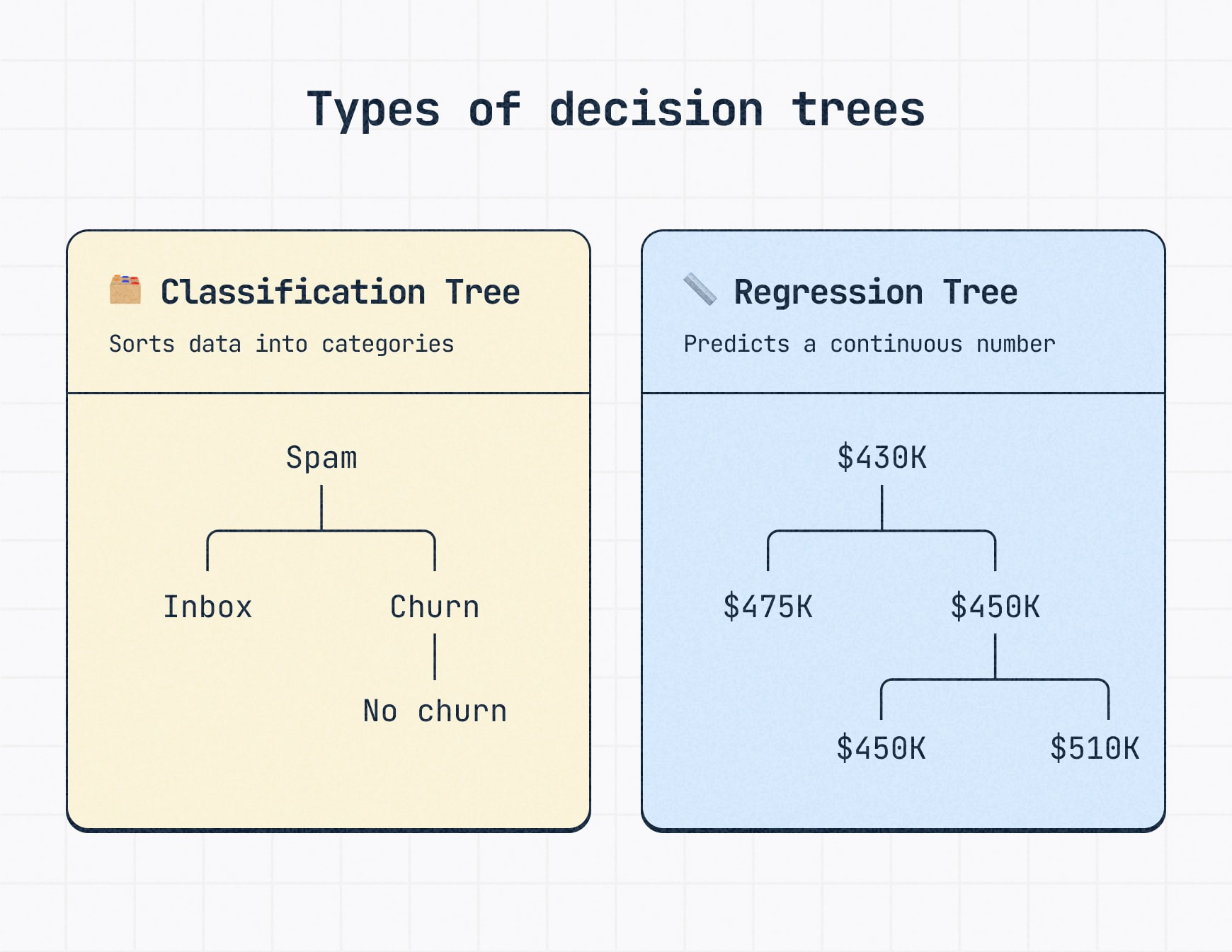

There are two core types of decision trees, and which one you use depends on the type of answer you’re trying to get.

Classification trees

These help sort data into categories. For example, should this email go to spam or the inbox? Is the customer likely to churn or stick around? A classification tree is typically used when the output variable is a class label.

Regression trees

These are used when your target variable is a number, like predicting house prices, delivery times or monthly revenue. Instead of a class label, each leaf node returns a numeric value, typically the average of the training examples that end up in that leaf.
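The difference between the two types shows up at the leaves. As a simplified sketch (assuming the common convention of a majority vote for classification leaves and the average for regression leaves, as in CART), here’s what each kind of leaf would return for the training examples that reach it:

```python
from collections import Counter


def classification_leaf(labels):
    """Classification leaf: predict the most common class label (majority vote)."""
    return Counter(labels).most_common(1)[0][0]


def regression_leaf(targets):
    """Regression leaf: predict the average target value of the examples in the leaf."""
    return sum(targets) / len(targets)


# Hypothetical training examples that ended up in one leaf each.
print(classification_leaf(["spam", "inbox", "spam"]))  # spam
print(regression_leaf([300_000, 320_000, 340_000]))    # 320000.0 (a predicted house price)
```

That averaging is also why regression tree predictions look "blocky": every input that lands in the same leaf gets the same number.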

Decision tree algorithms

If you’re building decision trees for machine learning, especially predictive tasks, algorithms are what work under the hood to guide the process. They determine how the tree splits data, chooses which questions to ask and balances accuracy with simplicity.

Most importantly, they’re what make decision trees trainable models, not just visual diagrams.

While flowchart tools are great for logic mapping, algorithms come into play when you’re working with large datasets, trying to predict outcomes or building models that learn from past data. They form the foundation of decision tree learning and are key to making the process scalable, efficient and effective.

- CART (Classification and Regression Trees): A workhorse algorithm that can handle both classification (sorting) and regression (predicting numbers).

- ID3 (Iterative Dichotomiser 3): An older, simpler algorithm mainly used for classification tasks.

- C4.5: Think of this as an improved version of ID3, also primarily for classification but able to handle numerical features and missing values.

- CHAID (Chi-square Automatic Interaction Detection): Often used for classification, especially when you have categories with multiple levels.

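- MARS (Multivariate Adaptive Regression Splines): Not strictly a decision tree, but a related regression technique that splits the data at learned thresholds and fits piecewise-linear segments, so it can capture more complex, non-linear relationships in the data.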

- Random Forests: Instead of just one tree, this method builds a whole "forest" of them and combines their predictions for better accuracy in both regression and classification.

- Gradient Boosting Trees: Another powerful ensemble method that builds trees sequentially, with each new tree trying to correct the mistakes of the ones before it, which often leads to high accuracy.

Essentially, these algorithms are the step-by-step instructions that tell the computer how to learn the best questions to ask at each point in the tree to arrive at a useful prediction or classification.
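To give you a feel for what "learning the best question" means, here’s a simplified Python sketch in the spirit of CART: for one numerical feature, it tries a threshold between each pair of neighboring values and keeps the one with the lowest weighted Gini impurity. The `best_threshold` helper and the login data are hypothetical:

```python
def gini(labels):
    """Gini impurity of a list of class labels (0 = pure)."""
    n = len(labels)
    return 1 - sum((labels.count(c) / n) ** 2 for c in set(labels))


def best_threshold(values, labels):
    """Try "value <= t" at the midpoint between each pair of neighboring
    distinct values; keep the t with the lowest size-weighted Gini impurity."""
    distinct = sorted(set(values))
    best_t, best_score = None, float("inf")
    for lo, hi in zip(distinct, distinct[1:]):
        t = (lo + hi) / 2
        left = [lab for v, lab in zip(values, labels) if v <= t]
        right = [lab for v, lab in zip(values, labels) if v > t]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if score < best_score:
            best_t, best_score = t, score
    return best_t, best_score


# Hypothetical data: monthly logins per customer and whether they churned.
logins = [1, 2, 3, 8, 9, 10]
churned = ["yes", "yes", "yes", "no", "no", "no"]
print(best_threshold(logins, churned))  # (5.5, 0.0): "logins <= 5.5?" separates them perfectly
```

A full algorithm repeats this search for every feature at every node, then recurses into each side of the winning split.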

A note on binary vs multi-way splits

Some trees are built like a series of yes/no questions. Others offer more than two paths at each decision point. Both approaches are valid; it just depends on your data and your goals.

In machine learning, tree construction involves choosing the features (and split points) that maximize information gain while managing issues like imbalanced data. The goal is a tree that keeps prediction errors low without losing the interpretability that makes decision trees useful in the first place.
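Here’s a quick sketch of the two split styles on a categorical feature. ID3-style trees typically create one branch per category, while CART-style trees ask a single yes/no question. The `plan` feature and the helper names are made up for illustration:

```python
from collections import defaultdict


def multiway_split(rows, feature):
    """One branch per distinct category value (ID3-style)."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[feature]].append(row)
    return dict(groups)


def binary_split(rows, feature, category):
    """Two branches for the yes/no question "is feature == category?" (CART-style)."""
    yes = [row for row in rows if row[feature] == category]
    no = [row for row in rows if row[feature] != category]
    return yes, no


# Hypothetical customers described by their subscription plan.
customers = [{"plan": "free"}, {"plan": "pro"}, {"plan": "free"}, {"plan": "enterprise"}]

print({plan: len(group) for plan, group in multiway_split(customers, "plan").items()})
# {'free': 2, 'pro': 1, 'enterprise': 1}
yes, no = binary_split(customers, "plan", "free")
print(len(yes), len(no))  # 2 2
```

A multi-way split keeps the tree shallower but can spread the data thinly across many branches, while binary splits tend to produce deeper trees built from simpler questions.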

Real-world decision tree examples

Let’s look at how these are used in the wild.

Just like user flow diagram examples help map out ideal journeys in UX design, decision trees guide structured thinking in industries like marketing, HR, customer support, healthcare and more.

Why? Because they make complex decisions clear and repeatable.

In ML, decision trees are a classic example of a "white box" model: you can read exactly how they arrive at a prediction, and they handle both classification and regression tasks.

And in data science, they’re one of the first tools you’ll learn because they’re easy to understand, quick to build and incredibly useful. A decision tree model learns decision rules from training data, with the goal of predicting the target variable accurately on new, unseen data too.

Everyday decisions example

This is a lighthearted example of a choice you’d make in a split second, but it shows the same logic at work: even simple everyday decisions follow a branching path with many possible outcomes.

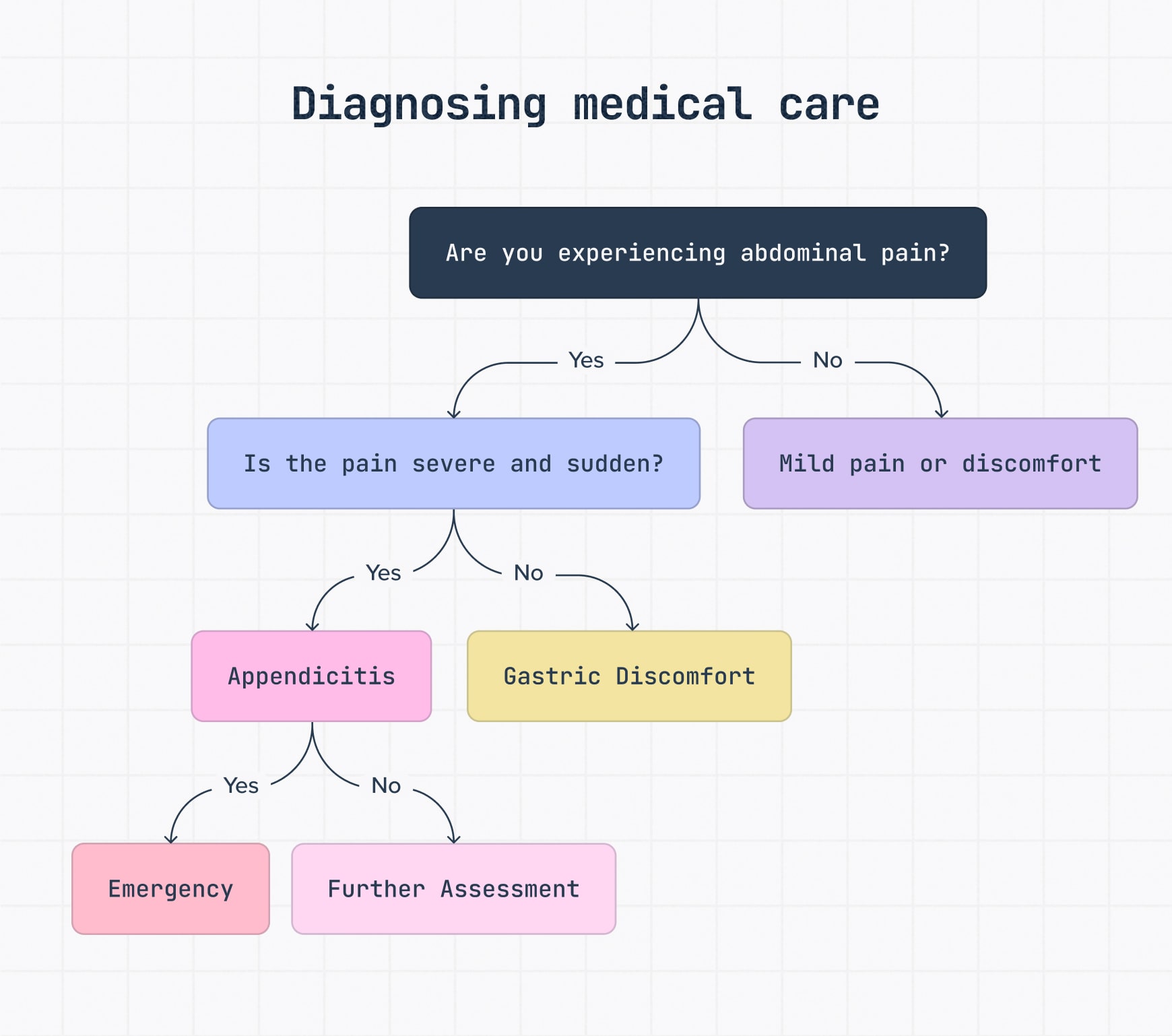

Medical example

In healthcare, decision trees can standardize responses in high-pressure situations.

This tree starts with a simple question: Is the patient experiencing abdominal pain?

If yes, we walk through severity, cause and treatment, potentially flagging for emergency care.

This kind of flow supports fast triage and can help reduce decision-making errors in clinical settings.

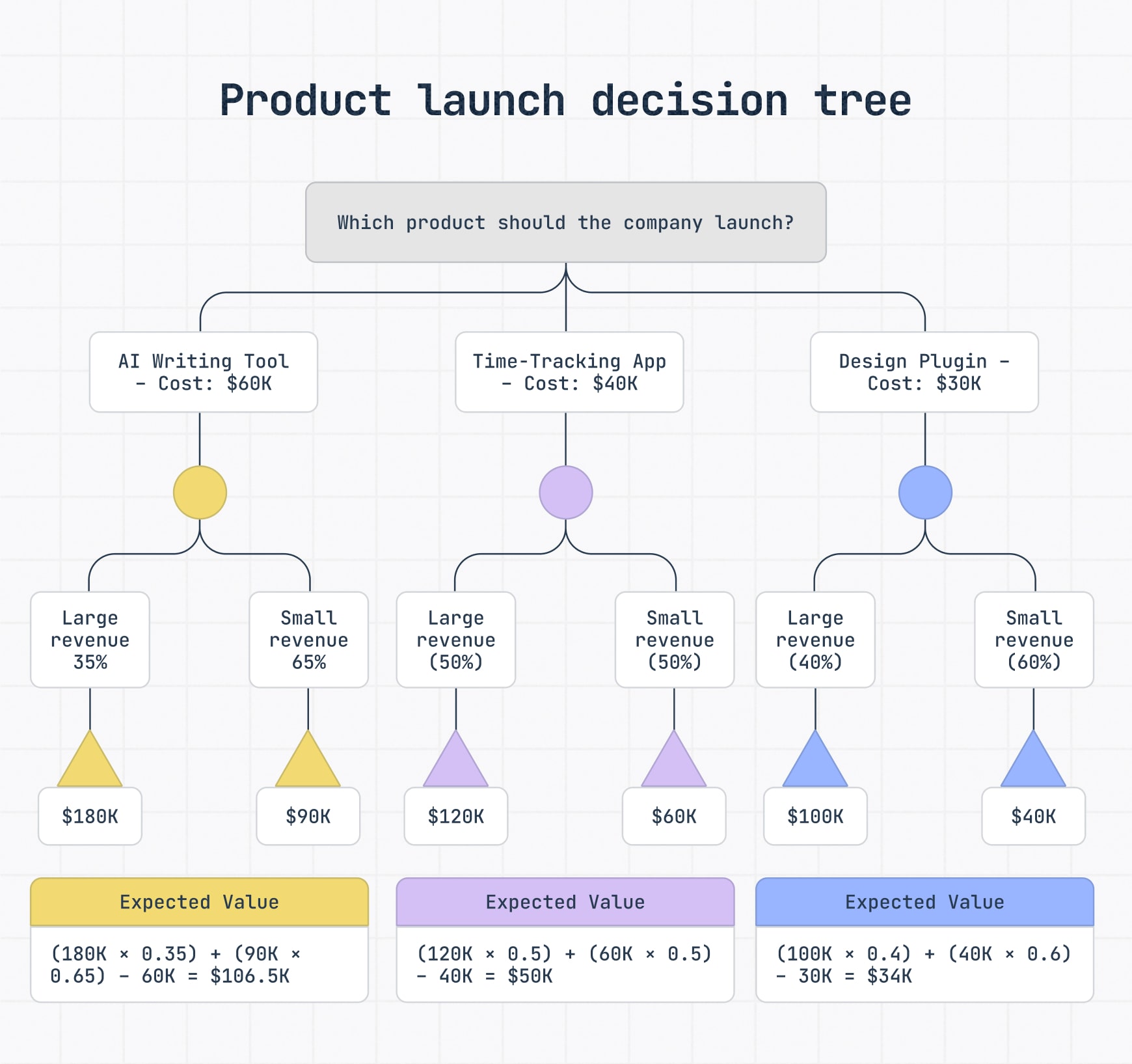

SaaS example

In tech, decision trees are a great way to estimate what a potential investment in upgrades, new tools and the like might yield.

How to build a decision tree

Creating a decision tree can be as simple as sketching it on a whiteboard or using a tool like Diagram Maker, or as technical as writing machine learning code (which we won’t cover in detail here, since that’s best left to the programming pros).

The method you choose depends on your goals, data complexity and whether you’re working alone or with a team.

The simple, no-code approach

For processes, SOPs and non-data-driven use cases, decision tree software like Slickplan makes it easy with drag-and-drop interfaces and decision tree templates to help you begin analyzing decisions quickly.

Here’s a very basic overview:

- Define your core decision: Clearly state the central question or decision you’re trying to make. What’s the main issue you need a decision on (e.g., whether to launch a campaign or approve a loan)? This is the starting point of your tree.

- Identify your choices: Determine the main options or alternatives available to you. What are the key paths you could take? These become the first branches of your tree.

- Map potential outcomes: For each option, think about the possible results or consequences. What are the likely outcomes if you choose this path? Add alternative branches as you go.

- Estimate values and continue branching: Assign a value, positive or negative, to each potential final outcome. Continue branching out from any subsequent decisions, mapping their potential outcomes, likelihood and expected values until all the outcomes are shown.

- Review and refine: Look over your entire diagram. Is the flow clear? Are all options and potential outcomes represented? Is the labeling concise and easy to understand? Make any necessary adjustments for clarity and completeness.
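Step four is where the math comes in. A common way to use those values is to "roll back" the tree: a chance node’s value is the probability-weighted average of its outcomes, and at a decision node you pick the option with the highest expected value. Here’s a minimal Python sketch of that idea; the tuple-based tree format, the helper names and the dollar figures are all hypothetical:

```python
def rollback(node):
    """Return the expected value of a node.
    - A plain number is a leaf (the value of a final outcome).
    - ("chance", [(probability, child), ...]) averages children by probability.
    - ("decision", {option: child, ...}) takes the best option's value.
    """
    if isinstance(node, (int, float)):
        return node
    kind, branches = node
    if kind == "chance":
        return sum(p * rollback(child) for p, child in branches)
    if kind == "decision":
        return max(rollback(child) for child in branches.values())
    raise ValueError(f"unknown node type: {kind}")


def best_option(decision):
    """For a decision node, return (option, expected value) of the best branch."""
    kind, branches = decision
    if kind != "decision":
        raise ValueError("best_option expects a decision node")
    values = {option: rollback(child) for option, child in branches.items()}
    option = max(values, key=values.get)
    return option, values[option]


# Hypothetical numbers for illustration only.
tree = ("decision", {
    "upgrade tool": ("chance", [(0.75, 40_000), (0.25, -20_000)]),
    "keep current tool": 5_000,
})
print(best_option(tree))  # ('upgrade tool', 25000.0)
```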

Check out our thorough step-by-step guide on how to create a decision tree for more details.

Coding approach

Naturally, this is going to require some computer science expertise, so we’re just going to cover the broad strokes:

- Define prediction task: Specify if you’re classifying categories, like loan approval, or predicting numbers, like sales. This sets your model’s goal.

- Organize your data: Data preparation is critical. Load your dataset and split it into training (to learn) and testing (to evaluate) portions; this is your model’s learning material.

- Train the tree model: Write code in a language like Python to fit a decision tree to your training data, using settings such as the maximum number of levels (depth) to control how complex the model gets.

- Evaluate model performance: Check how well your trained tree predicts outcomes on the unseen testing data.

- Predict on new data: Use your trained decision tree to predict outcomes for new, incoming data points. This is where your model makes actual decisions or predictions.
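To show how those five steps fit together, here’s a bare-bones, pure-Python sketch that grows a small CART-style classification tree (Gini impurity, binary splits, a depth limit). It’s a teaching example under simplifying assumptions, using a synthetic loan dataset with a made-up approval rule. In real projects you’d typically reach for a library like scikit-learn, whose `DecisionTreeClassifier` offers a similar `max_depth` setting:

```python
import random
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels (0 = pure node)."""
    n = len(labels)
    return 1 - sum((c / n) ** 2 for c in Counter(labels).values())


def majority(labels):
    """Most common label; stored as the prediction in a leaf."""
    return Counter(labels).most_common(1)[0][0]


def build_tree(X, y, depth=0, max_depth=3, min_samples=2):
    """Pick the (feature, threshold) split with the lowest weighted Gini
    impurity, then recurse on each side. max_depth limits complexity."""
    if depth >= max_depth or len(y) < min_samples or len(set(y)) == 1:
        return majority(y)
    best = None  # (score, feature index, threshold)
    for f in range(len(X[0])):
        values = sorted({row[f] for row in X})
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2
            left = [lab for row, lab in zip(X, y) if row[f] <= t]
            right = [lab for row, lab in zip(X, y) if row[f] > t]
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if best is None or score < best[0]:
                best = (score, f, t)
    if best is None:  # every row has identical features: nothing to split on
        return majority(y)
    _, f, t = best

    def grow(idx):
        return build_tree([X[i] for i in idx], [y[i] for i in idx],
                          depth + 1, max_depth, min_samples)

    left_idx = [i for i, row in enumerate(X) if row[f] <= t]
    right_idx = [i for i, row in enumerate(X) if row[f] > t]
    return (f, t, grow(left_idx), grow(right_idx))


def predict(tree, row):
    """Follow (feature, threshold, left, right) nodes until reaching a leaf label."""
    while isinstance(tree, tuple):
        f, t, left, right = tree
        tree = left if row[f] <= t else right
    return tree


def accuracy(tree, X, y):
    return sum(predict(tree, row) == lab for row, lab in zip(X, y)) / len(y)


# 1. Prediction task: classify hypothetical loan applications.
# 2. Data: a synthetic grid of (credit_score, income in $k) with a made-up rule.
X = [(c, i) for c in range(500, 801, 50) for i in range(20, 81, 10)]
y = ["approve" if c >= 650 and i >= 40 else "reject" for c, i in X]
rows = list(zip(X, y))
random.Random(42).shuffle(rows)
cut = int(0.75 * len(rows))
X_train, y_train = [r for r, _ in rows[:cut]], [lab for _, lab in rows[:cut]]
X_test, y_test = [r for r, _ in rows[cut:]], [lab for _, lab in rows[cut:]]

# 3. Train with a depth limit.
tree = build_tree(X_train, y_train, max_depth=3)
# 4. Evaluate on the unseen test rows.
print("test accuracy:", accuracy(tree, X_test, y_test))
# 5. Predict for a new applicant.
print(predict(tree, (720, 55)))
```

Because the tree is just nested tuples, you can print `tree` to see the exact thresholds it learned, which is the kind of transparent logic that makes decision trees easy to explain.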

Advantages and disadvantages of decision trees

There are several advantages and disadvantages to working with decision trees.

Let’s take a quick look at both.

Advantages

- Easy to interpret — Decision trees are visual and intuitive, making them easy to explain to any audience.

- Minimal prep needed — They work well without complex data cleaning or normalization.

- Transparent logic — They show how decisions are made, unlike black-box models.

- Versatile tasks — Use them for both classification and regression.

- Flexible input — Handle both categorical and numerical data with ease.

Disadvantages

- Prone to overfitting — Without pruning, trees can cling too tightly to training data and perform poorly in the real world.

- Less accurate than some models — Ensemble methods like Random Forest often outperform single trees.

- Can be unstable — Small data changes can lead to very different trees.

- Stepwise predictions — Regression trees don’t predict smooth trends, just blocky estimates.

- No continuous learning — Trees don’t update automatically; retraining is needed to reflect new data.

Making better decisions with decision trees

Decision trees aren’t just a technical tool – they’re kind of a mindset. Whether you’re dealing with structured data or concrete processes, they help you lay out your thinking, test assumptions and arrive at more informed, consistent outcomes.

From simplifying workflows and scaling hiring practices to training accurate machine learning models, decision trees adapt to the needs of your business, no matter the industry, and grow with your goals. They bring order where there’s confusion, structure where there’s complexity and logic where decisions might otherwise rely on gut feeling alone.

So whether you’re drawing branches on a whiteboard or training a model in Python, you’re not just mapping decisions, you’re building a smarter way forward.

Decision tree FAQs

What is a leaf node vs an internal node in a decision tree?

An internal node is where a decision happens — it splits the data based on a condition. A leaf node is where the decision ends. It holds the final output, like a classification or prediction. Think of it as the answer after all conditions are tested.

Can decision trees deal with missing values in data?

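Often, yes, though it depends on the algorithm and implementation. C4.5, for example, spreads records with missing values across branches in proportion to the known data, while CART uses surrogate splits (backup questions on closely related features). Other implementations expect you to fill in missing values before training. Either way, trees can still make predictions when parts of the data are incomplete or unknown.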

Do decision trees work with both numbers and categories?

They do! Decision trees can split data using numerical values (like income > $50,000) and categorical values (like "yes" or "no"). This flexibility is one of the reasons they're used in so many industries – they adapt to real-world data easily.

What makes a decision tree optimal in machine learning?

An optimal decision tree strikes a balance between accuracy and simplicity. It's deep enough to capture useful patterns but not so complex that it overfits. Techniques like pruning and cross-validation help keep the tree focused on what really improves predictions.

What do the shapes in decision tree diagrams mean?

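Shapes like diamonds and rectangles have meaning. In flowchart-style diagrams, a diamond usually marks a decision point, while rectangles represent outcomes or actions. Formal decision analysis diagrams often use squares for decisions, circles for chance events and triangles for end points instead. These symbols help keep your decision tree diagram easy to read, especially when presenting logic to others or using decision tree templates.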

![What is a decision tree? [Practical examples & use cases 2026]](https://cdn-proxy.slickplan.com/wp-content/uploads/2023/08/Decision_tree_cover.png)
